Alexandra did her undergraduate degree in Molecular Biotechnology at the Technical University of Munich and worked on a project related to familial ALS during her MSc in Translational Neuroscience at Imperial College London. She has since started her PhD at the Max Planck Institute of Neurobiology in Munich.

‘What was once thought can never be unthought’ is one of the most famous quotes from ‘The Physicists’ by Friedrich Dürrenmatt. Published in 1963, the play deals with the responsibility of science. Although its historical background is the Cold War, the question of science’s responsibility towards society is still highly relevant today. At the crossroads between science and society, the word ‘responsibility’ covers a wide range of aspects, from the application of scientific findings to the correctness and reproducibility of research, and beyond. Here we briefly discuss the use of neuroimaging in a legal context, reproducibility, and the prevention of misconduct.

When neuroimaging enters the court

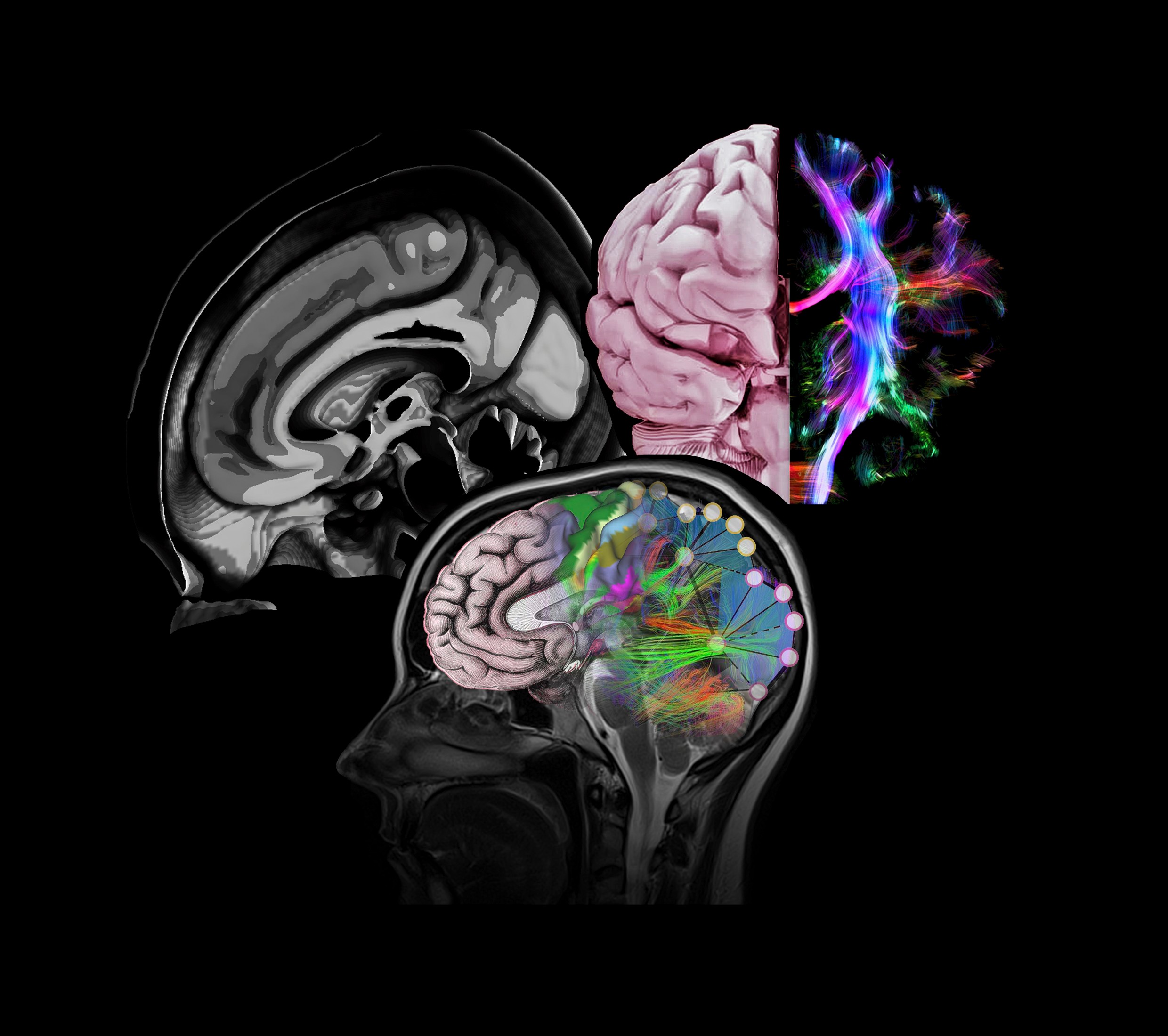

Neuroimaging findings have recently been used for dangerousness assessments in criminal trials as part of psychiatric testimony (Gkotsi and Gasser, 2016). Neuroimaging is an umbrella term for methods that visualise the structure of the brain or its responses to tasks. A widely used neuroimaging technique is fMRI, which maps neuronal activity, either during a task or at rest, onto brain networks by measuring changes in blood oxygenation. These changes (known as the BOLD signal, for blood-oxygen-level-dependent contrast) are assumed to reflect changes in neuronal activity in the areas where they occur.

However, fMRI still has limitations. One of them is its limited temporal resolution (Ugurbil, 2016). Another is that it measures neuronal activity only indirectly, through changes in the local blood supply. For this reason, imaging data should be used very carefully, especially since at first glance they might look like ‘objective criteria’ for determining a person’s dangerousness (Gkotsi and Gasser, 2016). As simple explanations are tempting in times of rising public fear, Gkotsi and Gasser warn against an ‘instrumentalisation of neuroscience in the interest of public safety’.

In addition, attributing features of dangerousness, such as violence, to a single biological cause is a ‘simplistic falsity’, as it neglects the social influences on an individual (Pustilnik, 2008). Moreover, the legal and social definition of what counts as violent behaviour has itself changed over time, which makes explaining dangerousness even more complex. Because the brain is so extensively interconnected, a phenomenon as multi-layered as violence cannot be traced back to a single brain region (Pustilnik, 2008). Even if certain regions, such as the prefrontal cortex at the front of the brain, are involved in ‘social control’, they are also involved in ‘problem solving’ or ‘pain processing’, and they always operate within a wider network (Ong et al., 2018). Finally, functional-anatomical correlations do not establish causality (Pustilnik, 2008).

Furthermore, this approach carries the danger of stigmatising people with neurological or psychiatric disorders (Gkotsi and Gasser, 2016). For example, a systematic review found that schizophrenia can be associated with an ‘absence/diminishment of criminal responsibility’ (Tsimploulis et al., 2018). However, the same review states that ‘many individuals with schizophrenia do not meet the legal criteria for an insanity plea’. This is a reminder that every case must be assessed individually, since a psychiatric condition is not in itself a reason for committing a crime. Stigmatisation has harmful effects: a large-scale study showed that, independently of the symptoms described, the diagnostic label of schizophrenia alone led to the person being rated as more ‘aggressive’ and ‘dangerous’ and less ‘trustworthy’ (Imhoff, 2016). Likewise, being identified as at risk of developing psychosis was associated with stigma, although more information about the actual risk could reduce this stigma (Yang et al., 2013).

Reproducibility and prevention of misconduct

Science needs to be reliable and reproducible. In 2016, a Nature survey of more than 1,500 researchers showed that more than 70% of them had tried and failed to reproduce another scientist’s experiments, and more than half had failed to reproduce their own (Baker, 2016). This does not necessarily mean that science is facing a crisis (Fanelli, 2018); reproducibility failures can even advance knowledge by revealing hidden variables (Redish et al., 2018).

Still, several researchers call for action to promote responsible practice wherever possible. Suggestions include describing methods in more detail and publishing negative results obtained under conditions that were not used in the final experiment, since these would also help other scientists (Drucker, 2016). Another proposal is a ‘reproducibility index’ for senior researchers, an easy way to show how many of their findings have been reproduced by another group (Drucker, 2016). Others have suggested that retractions should be easier to publish, with a clear distinction between misconduct and honest recognition that later experiments proved a finding wrong (Flier, 2017). It has also been proposed that peer review reports be made publicly available, so that the whole community could benefit from the suggestions made during the process (Flier, 2017). This would also give reviewers credit for their work and make professional conflicts visible. At the same time, care must be taken that strict reviewers do not become targets of harassment by disappointed authors (Flier, 2017).

Preventing misconduct is another essential aspect of responsible science. Another Nature survey of more than 3,200 scientists found that ‘slightly more than half of non-PIs said they had often or occasionally felt pressured to produce a particular result in the past year’ (van Noorden, 2018), and ‘Group and authority pressure’ was one of the pitfalls of research misconduct identified by Gunsalus and Robinson (2018). These authors advocate more training to make young scientists aware of research misconduct, and propose assessing the research climate in the lab as a further tool for preventing it (Gunsalus and Robinson, 2018).

Conclusions

Overall, raising awareness of these problems in scientific research is the first step towards a more responsible scientific and social environment. Together with the measures proposed by different scientific groups, such as more training and critical reflection on one’s own research, everyone can contribute to this goal.

In ‘21 Points to The Physicists’, Dürrenmatt stated that ‘the content of physics is the concern of physicists, its effect the concern of all men.’ Since this holds not only for physics but for scientific research as a whole, responsibility should be a key characteristic of science.

References

Baker, M (2016): 1,500 scientists lift the lid on reproducibility. Nature 533 (7604), 452–454.

Drucker, DJ (2016): Never Waste a Good Crisis: Confronting Reproducibility in Translational Research. Cell Metabolism 24 (3), 348–360.

Dürrenmatt, F (1963): The Physicists: A Play in Two Acts.

Fanelli, D (2018): Opinion: Is science really facing a reproducibility crisis, and do we need it to? Proceedings of the National Academy of Sciences of the United States of America 115 (11), 2628–2631.

Flier, JS (2017): Irreproducibility of published bioscience research: Diagnosis, pathogenesis and therapy. Molecular Metabolism 6 (1), 2–9.

Gkotsi, GM; Gasser, J (2016): Neuroscience in forensic psychiatry: From responsibility to dangerousness. Ethical and legal implications of using neuroscience for dangerousness assessments. International Journal of Law and Psychiatry 46, 58–67.

Gunsalus, CK; Robinson, AD (2018): Nine pitfalls of research misconduct. Nature 557 (7705), 297–299.

Imhoff, R (2016): Zeroing in on the Effect of the Schizophrenia Label on Stigmatizing Attitudes: A Large-scale Study. Schizophrenia Bulletin 42 (2), 456–463.

Ong, W-Y; Stohler, CS; Herr, DR (2018): Role of the Prefrontal Cortex in Pain Processing. Molecular Neurobiology.

Pustilnik, AC (2008): Violence on the brain: A critique of neuroscience in criminal law. Harvard Law School Faculty Scholarship Series, Paper 14.

Redish, AD; Kummerfeld, E; Morris, RL; Love, AC (2018): Opinion: Reproducibility failures are essential to scientific inquiry. Proceedings of the National Academy of Sciences of the United States of America 115 (20), 5042–5046.

Tsimploulis, G; Niveau, G; Eytan, A; Giannakopoulos, P; Sentissi, O (2018): Schizophrenia and Criminal Responsibility: A Systematic Review. The Journal of Nervous and Mental Disease 206 (5), 370–377.

Ugurbil, K (2016): What is feasible with imaging human brain function and connectivity using functional magnetic resonance imaging. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences 371 (1705).

van Noorden, R (2018): Some hard numbers on science’s leadership problems. Nature 557 (7705), 294–296.

Yang, LH; Anglin, DM; Wonpat-Borja, AJ; Opler, MG; Greenspoon, M; Corcoran, CM (2013): Public stigma associated with psychosis risk syndrome in a college population: Implications for peer intervention. Psychiatric Services (Washington, D.C.) 64 (3), 284–288.

Picture credits: Wellcome Collection (picture by Gabriel González-Escamilla)